Evaluating programs is crucial for sport organizations. For example, evaluation can be used to demonstrate the impact of programs to funding agencies (like Sport Canada) or to inform changes in programming to better serve sport participants. However, many sport organizations lack the time, money, or knowledge and experience to undertake evaluation work effectively (Mitchell & Berlan, 2016; Lovell et al., 2016).

With the aim of filling a sector-wide gap in evaluation capacity and improving our internal evaluation practices, the Sport Information Resource Centre (SIRC) partnered with Corliss Bean, PhD, CE, a researcher and evaluation expert from Brock University. Together, we formed a research partnership focused on delivering a knowledge translation initiative intended to increase evaluation knowledge and capacity among non-profit sport organizations in Canada.

In this SIRCuit article, we’ll describe our partnership and our process for planning, delivering and evaluating our knowledge translation initiative. We’ll also provide tips and learnings for organizations looking to engage in research partnerships.

This SIRCuit article is based on an academic article coauthored by SIRC and Corliss Bean. Download the article here.

Developing the partnership

In 2020, SIRC engaged Corliss Bean, PhD, CE, to facilitate a 2-part evaluation workshop for SIRC staff members. The workshop allowed us to reflect on our evaluation practices and identify ways in which they could be improved. Through this workshop and discussions with other sport organizations, we realized that there was a need for more evaluation education in the sport sector, as many organizations did not have the knowledge or capacity to perform meaningful evaluations of their initiatives.

To address this need, we formed a research partnership. In a research partnership, researchers and community stakeholders or organizations come together to work towards a common goal that will benefit both research and practice (Holt et al., 2018; Kendellen et al., 2017). The goals of our partnership were to:

- Co-host a webinar series focused on teaching evaluation skills that would fill key knowledge gaps and improve evaluation capacity in the sport sector.

- Co-create resources (for example, worksheets and videos) that would complement the webinar series to facilitate knowledge uptake and enhance the reach of the initiative.

- Co-evaluate the webinar series and resources so that we could learn about what worked well and what could be improved.

- Build SIRC’s evaluation capacity so that we can increase our knowledge, improve our programs and better serve Canada’s sport sector.

Partnership principles

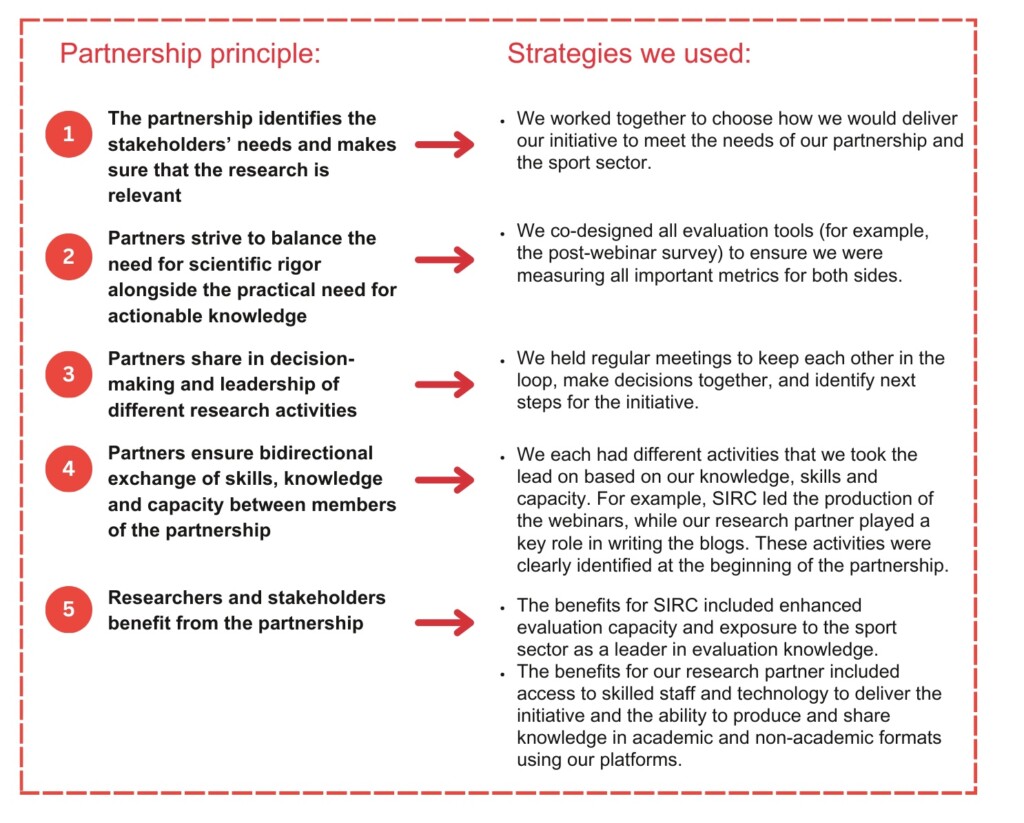

To maintain a positive relationship and engage meaningfully with one another, we developed a set of principles to guide our research partnership. These principles were drawn from a list based on a synthesis of the partnership literature (Hoekstra et al., 2020). Together with our research partner, Corliss Bean, we identified and agreed upon the principles that both partners felt would contribute to a successful partnership. A list of these principles and examples of the strategies we used to uphold each principle is below.

Guiding the process

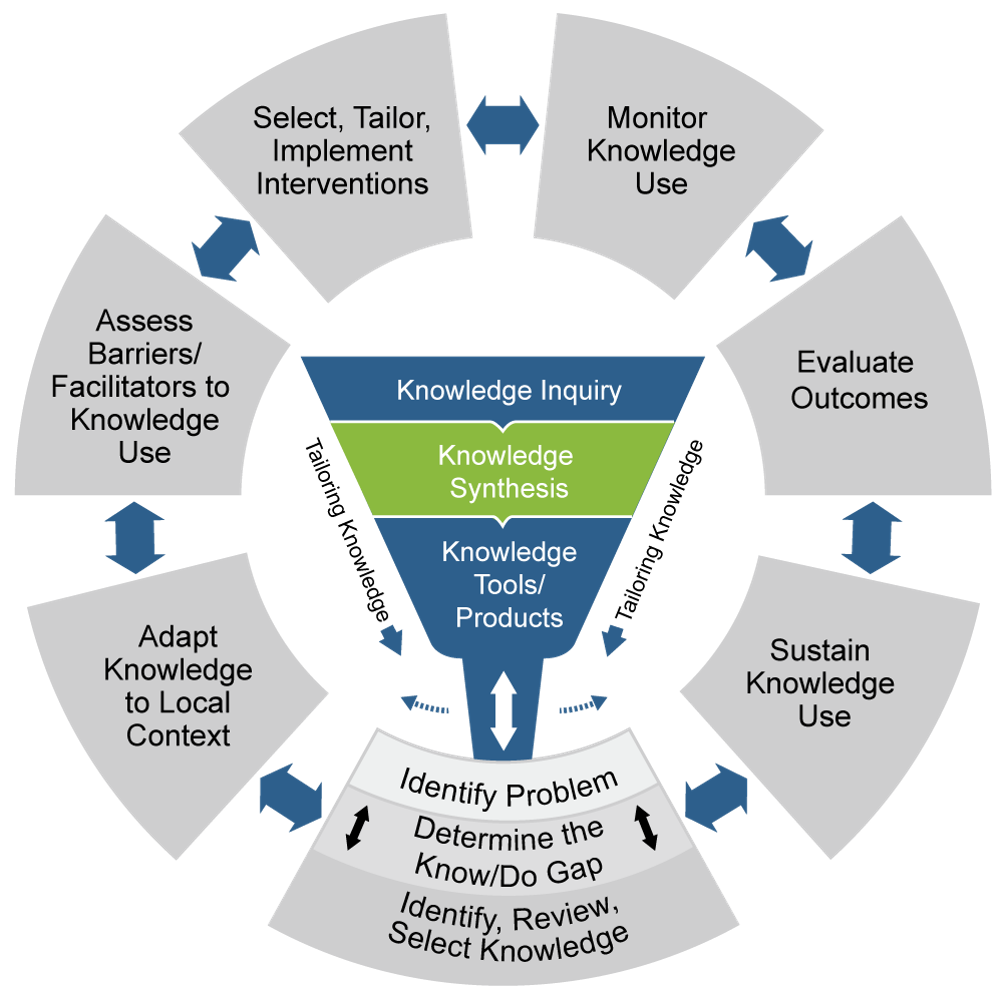

For initiatives to have an impact, they must be thoughtfully designed with the needs of the knowledge users (in our case, sport administrators) in mind. To ensure that our initiative would have an impact, we guided its planning, delivery and evaluation using the Knowledge to Action Framework (KTA; Graham et al., 2006).

The KTA Framework comprises 2 processes. The first is the Knowledge Creation Funnel, shown in the centre of Figure 1, which describes the process of creating new knowledge and synthesizing it into key messages that can be shared in the form of tools and products. The second is the Action Cycle, shown on the outer ring of Figure 1, which describes the process of designing, implementing, and evaluating evidence-informed resources, programs or initiatives to sustain knowledge use in practice.

Our initiative was guided primarily by the Action Cycle. Below, we describe how we used the stages of the Action Cycle to inform the planning, delivery and evaluation of our initiative. These steps may serve as a useful blueprint for guiding your next knowledge translation project.

Step 1: Identify the problem and determine the knowledge-to-action gap

We identified a need to improve our internal evaluation capacity and engaged a researcher with relevant expertise to help us. Recognizing a broader sector-wide gap in evaluation knowledge and capacity, we formed a research partnership to help close this gap.

Step 2: Select knowledge and adapt it to the local context

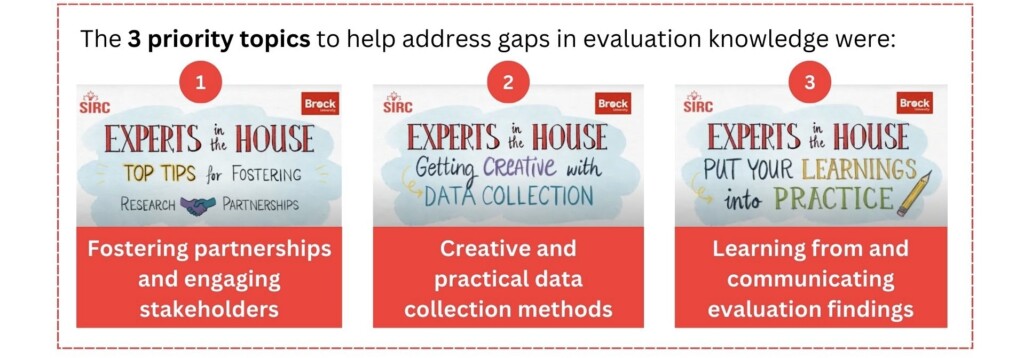

As a partnership, we reviewed the evaluation literature in the non-profit and sport contexts to see what had previously been done. We considered our own experiences with evaluation and reached out to other organizations to learn about their needs. Together we identified 3 priority topics reflecting key gaps in non-profit and sport sector evaluation knowledge.

Step 3: Assess barriers and facilitators to knowledge use

We used the findings of our literature review and our first-hand experiences to identify some of the key reasons why organizations were not performing evaluation. Common barriers to evaluation practice in non-profit sport organizations included:

- A lack of staff experience or training

- A lack of operationalized resources

- Limited financial resources

By understanding what was stopping organizations from performing evaluation, we could better design resources to help them overcome these barriers.

Step 4: Select, tailor and implement interventions

We planned to deliver webinars as the main component of our initiative because of the success of SIRC’s Experts in the House webinar series. Webinars are geographically and financially accessible to a wide range of people, can host many participants at once, and can be recorded and shared at a later date. To complement the webinars, we developed and shared blog posts, videos, and templates. These resources repackaged the knowledge shared through the webinar series in diverse formats to support learning and future application of knowledge. We hosted these resources in a free, easily accessible online toolkit on SIRC’s webpage. The webinar series and complementary resources helped to overcome barriers including limited financial resources and evaluation resources, and provided an opportunity for participants to enhance their evaluation knowledge in our 3 priority areas.

Steps 5 and 6: Monitor knowledge use and evaluate the outcomes

We monitored how the knowledge shared through our initiative was being used and evaluated its impact using a tool called the RE-AIM Framework (Glasgow et al., 1999). Regular monitoring and evaluation (for example, after each webinar) provides an important opportunity to see what is or is not working and make adjustments to improve outcomes. Definitions for each dimension of the RE-AIM Framework and examples of the indicators we evaluated for each dimension are outlined below.

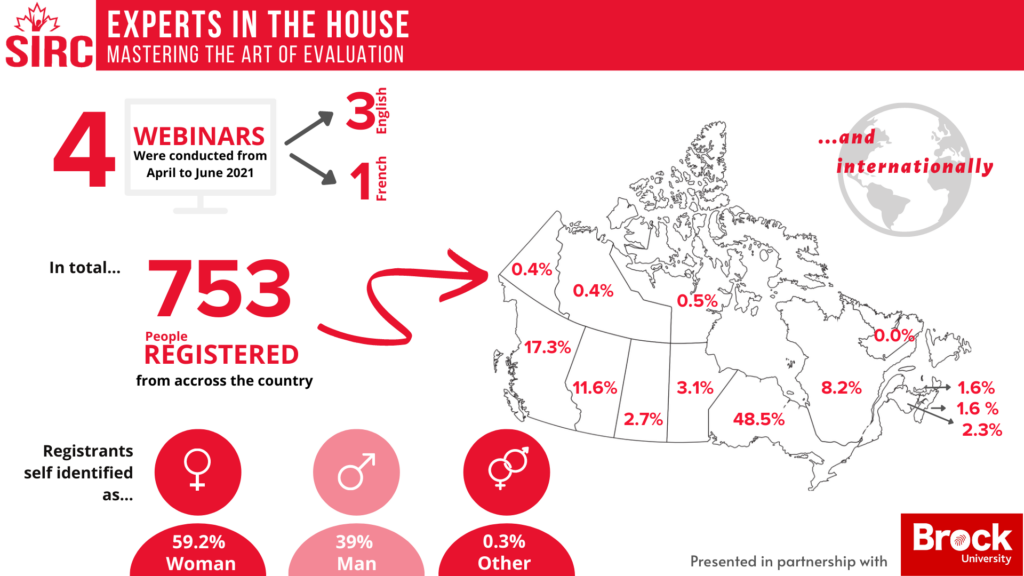

- Reach refers to the number, proportion, and representativeness of individuals who are affected by the initiative. We measured reach using webinar registration forms and social media analytics. For example, we assessed the number of registrations, demographic characteristics of people who registered (such as their role in sport, occupation, and province or territory of residence), and the number of people who viewed webinar and resource promotions on social media.

- Effectiveness refers to the impact of the initiative on positive and negative outcomes. We chose to evaluate changes in evaluation knowledge, confidence to engage in evaluation practices, and intentions to apply learnings about each evaluation topic. We measured these outcomes using a survey that was sent to participants immediately following each webinar. Results showed that the webinar series was largely effective: 86% of respondents reported an increase in knowledge, 73% reported an increase in confidence, and 78% planned to apply what they learned. Beyond the webinar series, we tracked the number of blog, video, and resource views on SIRC’s webpage as a general indicator of knowledge uptake.

- Adoption refers to the number, proportion, and representativeness of settings and staff who are willing to participate in the initiative. For our initiative, we measured the number of attendees and the webinar attendance rate (the proportion of people who registered who actually attended) using Zoom attendee reports. Our attendance rate averaged 51% across the webinar series, falling in line with the industry standard for virtual events (Bennet, 2023).

- Implementation refers to the extent to which the initiative was delivered as planned. Indicators of implementation included the planned versus actual number of webinars hosted and resources created, as well as participant feedback (collected via the post-event survey) about what they liked and what could be improved. Overall, the initiative was delivered according to plan. However, participants suggested including more time for question and answer periods and encouraged the webinar speakers to include more practical takeaways in their presentations. We used the feedback collected after each webinar to improve the delivery of later webinars in the series.

- Maintenance refers to the long-term impact of the initiative on participant outcomes (beyond 6 months), as well as the extent to which the initiative becomes part of organizational practice. We continue to monitor and evaluate maintenance by tracking the number of blog, video, and resource views on SIRC’s webpage over time.
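For organizations that track similar indicators in a spreadsheet or script, the attendance rate described under Adoption (the proportion of registrants who actually attended) can be calculated per webinar and then averaged across a series. The following is a minimal sketch in Python, not the method SIRC used. The function names and all registration and attendance counts are hypothetical and are included only to illustrate the arithmetic.

```python
from statistics import mean


def attendance_rate(registered: int, attended: int) -> float:
    """Return the proportion of registrants who attended one webinar."""
    if registered <= 0:
        raise ValueError("registered must be a positive number")
    if attended < 0 or attended > registered:
        raise ValueError("attended must be between 0 and registered")
    return attended / registered


def average_attendance_rate(webinars: list[tuple[int, int]]) -> float:
    """Average the per-webinar attendance rates across a series.

    Each item is a (registered, attended) pair for one webinar.
    """
    return mean(attendance_rate(reg, att) for reg, att in webinars)


# Hypothetical counts for a 3-webinar series (not SIRC's data).
example_series = [(200, 120), (150, 75), (100, 40)]

for number, (reg, att) in enumerate(example_series, start=1):
    print(f"Webinar {number}: {attendance_rate(reg, att):.0%} attendance")

print(f"Series average: {average_attendance_rate(example_series):.0%}")
```

Averaging the per-webinar rates weights each webinar equally. Dividing total attendees by total registrants would instead weight larger webinars more heavily, so it is worth deciding which version you will report before you start collecting data.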

Step 7: Sustain knowledge use

The steps of the Action Cycle are ultimately intended to lead to the sustained application of knowledge. We continue to encourage knowledge use by sharing the resources we created with the sport sector at regular intervals through SIRC’s daily newsletter and social media channels. The SIRC team also continues to apply the knowledge and skills gained through this partnership, as well as our co-created tools and templates, in our everyday evaluation practices.

Final thoughts and key learnings

This research partnership provided both partners with the resources and capacity to develop and deliver a webinar series and online resources to help enhance evaluation capacity in Canada’s sport sector. Keys to the success of this partnership included using the KTA and RE-AIM Frameworks to intentionally plan and evaluate our initiative from beginning to end, as well as identifying and agreeing upon a set of principles to guide our partnership from the outset.

Are you in the process of developing a research partnership to support knowledge translation? Here are 3 tips to help you get started:

- Create your initiatives with knowledge users in mind: Leverage your experiences and communicate with knowledge users to guide the development of your initiatives. Make sure you are engaging knowledge users early in the process to ensure that your initiatives will meet their needs and reduce the barriers they are facing to knowledge use.

- Evaluation is key: Take time at the beginning of your project to plan out how you will evaluate each stage of your initiative and be sure to communicate with your partner to identify what you need to measure (for example, funder requirements) to assess the impact of your program. Meaningful evaluation takes time and planning. For us, the RE-AIM and KTA Frameworks were useful tools to help guide our evaluation.

- Play to your strengths: At the beginning of your partnership, discuss what each group will bring to the table and identify who will take “ownership” of each role and activity with support from all sides. Playing to each other’s strengths and communicating regularly around needs helps make partnerships successful.